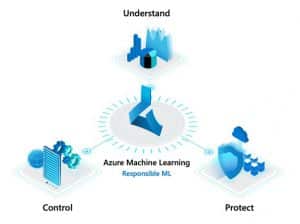

As AI reaches critical momentum across industries and applications, it becomes essential to ensure the safe and responsible use of AI. AI deployments are increasingly impacted by the lack of customer trust in the transparency, accountability, and fairness of these solutions. Microsoft is committed to the advancement of AI and machine learning, driven by principles that put people first, and tools to enable this in practice.

As Machine Learning becomes deeply integrated into our daily business processes, transparency is critical. Azure Machine Learning helps you to not only understand model behaviour but also assess and mitigate unfairness.

Model interpretability capabilities in Azure Machine Learning, powered by the InterpretML toolkit, enable developers and data scientists to understand model behaviour and provide model explanations to business stakeholders and customers.
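To make "understanding model behaviour" concrete, here is a minimal sketch of permutation feature importance, one model-agnostic explanation technique. It uses only the Python standard library and is not the InterpretML API. The toy model, the data, and the function name `permutation_importance` are all assumptions made for illustration.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Return, per feature, the accuracy lost when that feature is shuffled."""
    rng = random.Random(seed)

    def accuracy(data):
        hits = sum(predict(row) == label for row, label in zip(data, labels))
        return hits / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        # Shuffle column j only, leaving every other feature untouched.
        column = [row[j] for row in rows]
        rng.shuffle(column)
        shuffled = [row[:j] + (value,) + row[j + 1:]
                    for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances


# Hypothetical toy model: predicts 1 when feature 0 exceeds 50, ignores feature 1.
def toy_model(row):
    return int(row[0] > 50)


rows = [(30, 1), (80, 0), (45, 1), (95, 0), (60, 1), (20, 0)]
labels = [toy_model(row) for row in rows]
importances = permutation_importance(toy_model, rows, labels, n_features=2)
print(importances)
```

A feature the model never reads gets an importance of exactly zero, because shuffling it cannot change any prediction. That is the kind of statement a stakeholder can check against their expectations of the model.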

Use model interpretability to:

A challenge with building AI systems today is the inability to prioritise fairness. Using Fairlearn with Azure Machine Learning, developers and data scientists can leverage specialised algorithms to help ensure fairer outcomes for everyone.
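As an illustration of what assessing unfairness can mean in practice, the sketch below computes per-group selection rates and the gap between them, a quantity often called the demographic parity difference. It is a standard-library sketch rather than Fairlearn's own implementation. The function names and the sample predictions are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs for two groups of four people each.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

A gap of zero means every group is selected at the same rate. Which metric is appropriate depends on the application, so this single number is a starting point for assessment, not a verdict.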

Use fairness capabilities to:

Machine Learning is increasingly used in scenarios that involve sensitive information, such as medical patient data or census data. Current practices, such as redacting or masking data, can be limiting for Machine Learning. To address this issue, differential privacy and confidential machine learning techniques can be used to help organisations build solutions while maintaining data privacy and confidentiality.

Using the new WhiteNoise differential privacy toolkit with Azure Machine Learning, data science teams can build Machine Learning solutions that preserve privacy and help prevent reidentification of an individual’s data.
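The core idea of differential privacy can be sketched with the Laplace mechanism: add calibrated random noise to a query result so that any one individual's presence has a bounded effect on the output. The example below is a standard-library illustration, not the WhiteNoise API. The function names, the `epsilon` value, and the sample data are assumptions, and Python's `random` module is not suitable for production privacy guarantees.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    # expovariate takes a rate, so rate 1/scale gives mean `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Noisy count of the values matching `predicate`.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(42)          # seeded only to make the sketch repeatable
ages = [34, 51, 29, 62, 47, 55, 38, 71]   # hypothetical records

noisy = private_count(ages, lambda age: age >= 50, epsilon=0.5, rng=rng)
print(noisy)
```

A smaller `epsilon` means more noise and stronger privacy. Averaged over many queries the noise cancels out, which is why real systems also track a total privacy budget across queries.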

Differential privacy protects sensitive data by:

In addition to data privacy, organisations are looking to ensure security and confidentiality of all Machine Learning assets.

To enable secure model training and deployment, Azure Machine Learning provides a strong set of data and networking protection capabilities. These include support for Azure Virtual Networks, private links to connect to ML workspaces, dedicated compute hosts, and customer-managed keys for encryption in transit and at rest.

Building on this secure foundation, Azure Machine Learning also enables data science teams at Microsoft to build models over confidential data in a secure environment, without being able to see the data. All ML assets are kept confidential during this process. This approach is fully compatible with open source ML frameworks and a wide range of hardware options.

To build responsibly, the ML development process should be repeatable, reliable, and hold stakeholders accountable. Azure Machine Learning enables decision makers, auditors, and everyone in the ML lifecycle to support a responsible process.

Azure Machine Learning provides capabilities to automatically track lineage and maintain an audit trail of ML assets. Details—such as run history, training environment, and data and model explanations—are all captured in a central registry, allowing organisations to meet various audit requirements.
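To show the idea behind an audit trail, here is a minimal sketch of a tamper-evident lineage record: the run's dataset, environment, and metrics are captured together and sealed with a hash. This is not how Azure Machine Learning stores lineage. The record layout, the field names, and the functions `make_lineage_record` and `verify_record` are hypothetical.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """SHA-256 hex digest of a byte string."""
    return hashlib.sha256(payload).hexdigest()


def make_lineage_record(run_id, dataset_bytes, environment, metrics):
    """Capture what a run used and produced, sealed with a hash."""
    record = {
        "run_id": run_id,
        "dataset_sha256": fingerprint(dataset_bytes),
        "environment": environment,
        "metrics": metrics,
    }
    # Serialise with sorted keys so the same content always hashes the same.
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["record_sha256"] = fingerprint(canonical)
    return record


def verify_record(record):
    """Return True if the record still matches its seal."""
    body = {k: v for k, v in record.items() if k != "record_sha256"}
    canonical = json.dumps(body, sort_keys=True).encode("utf-8")
    return fingerprint(canonical) == record["record_sha256"]


record = make_lineage_record(
    run_id="run-001",                       # hypothetical identifier
    dataset_bytes=b"age,income\n34,52000\n",
    environment={"python": "3.10", "framework": "example"},
    metrics={"accuracy": 0.91},
)
print(verify_record(record))
```

An auditor who re-computes the hash can tell whether the recorded dataset fingerprint, environment, or metrics were altered after the run.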

Datasheets provide a standardised way to document ML information such as motivations, intended uses, and more. Microsoft led research on datasheets to provide transparency to data scientists, auditors, and decision makers. The custom tags capability in Azure Machine Learning can be used to implement datasheets today, and over time we will release additional features.
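One way to picture a datasheet carried as custom tags is to flatten it into string key-value pairs. The sketch below assumes tags are flat string pairs and does not call any Azure Machine Learning API. The `datasheet.` key prefix, the field names, and the function `datasheet_to_tags` are hypothetical.

```python
def datasheet_to_tags(datasheet, prefix="datasheet"):
    """Flatten a datasheet dict into string-valued tags."""
    tags = {}
    for key, value in datasheet.items():
        if isinstance(value, (list, tuple)):
            # Join multi-valued answers into one readable string.
            value = "; ".join(str(item) for item in value)
        tags[f"{prefix}.{key}"] = str(value)
    return tags


# Hypothetical datasheet answers for a dataset.
datasheet = {
    "motivation": "Predict loan repayment risk",
    "intended_uses": ["credit scoring research", "fairness benchmarking"],
    "contains_personal_data": True,
}

tags = datasheet_to_tags(datasheet)
for name, text in tags.items():
    print(f"{name} = {text}")
```

Keeping every value a plain string makes the tags easy to search and compare, at the cost of losing structure such as nested answers.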

In addition to the new capabilities in Azure Machine Learning and open-source tools, Microsoft has also developed principles for the responsible use of AI. The new responsible ML innovations and resources are designed to help developers and data scientists build more reliable, fairer, and more trustworthy ML.
